Under increasing scrutiny and pressure from activists and concerned parents, OpenAI has announced the formation of a new team dedicated to studying ways to safeguard its AI tools from potential misuse or abuse by children.

A recent job listing on OpenAI’s career page revealed the existence of a Child Safety team. According to the listing, the team works with OpenAI’s platform policy, legal, and investigations groups, as well as with outside partners, to manage processes, incidents, and reviews concerning underage users.

The company is seeking a child safety enforcement specialist to apply OpenAI’s policies to AI-generated content and to oversee review processes for sensitive content, particularly content relevant to children.

Large tech vendors often devote significant resources to complying with laws such as the U.S. Children’s Online Privacy Protection Rule, which imposes controls on what children can access online and on how companies collect their data. OpenAI’s decision to hire child safety experts is therefore consistent with industry practice, especially as the company anticipates a potentially significant underage user base. (OpenAI’s current terms of use require parental consent for users aged 13 to 18 and prohibit use by children under 13.)

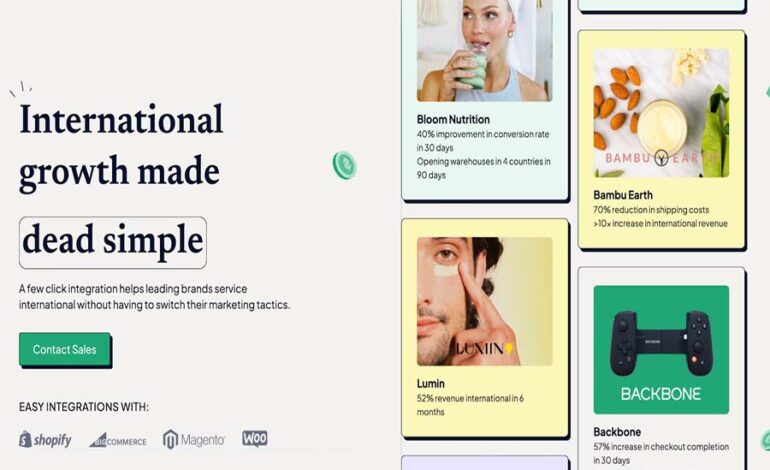

The Child Safety team was established shortly after OpenAI announced a partnership with Common Sense Media to develop kid-friendly AI guidelines and signed its first education customer. Taken together, these moves suggest the company is trying to get ahead of concerns about minors’ use of AI tools and to avoid negative publicity.

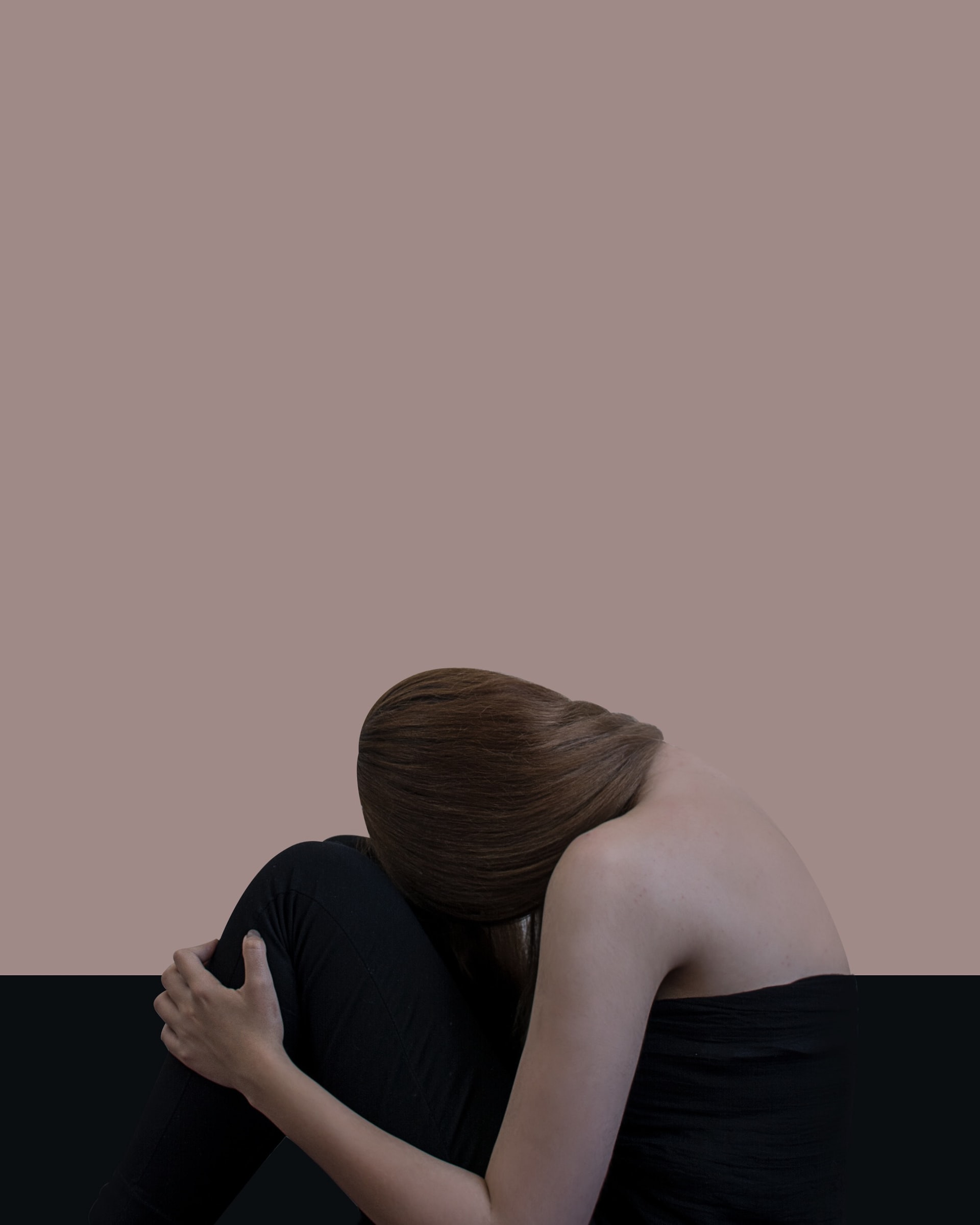

As children and teenagers increasingly turn to AI-powered tools for academic assistance and personal support, concerns about potential risks have surfaced. Reports indicate that a significant percentage of kids have used AI tools like ChatGPT to address issues ranging from mental health to family conflicts.

While some view AI as a beneficial resource, others point to the risks of its use, including plagiarism, misinformation, and negative interactions. Some schools have banned ChatGPT over such concerns, drawing attention to the need for guidelines and regulations governing minors’ access to AI technology.

Recognizing the importance of responsible AI usage, OpenAI has provided documentation and guidance for educators on incorporating AI tools like ChatGPT into classrooms while exercising caution regarding inappropriate content. Additionally, global organizations like UNESCO have emphasized the necessity of government regulations to ensure the safe and ethical use of AI in education.

As OpenAI continues to navigate the evolving landscape of AI technology, the establishment of its Child Safety team underscores the company’s commitment to prioritizing the safety and well-being of its users, particularly children and adolescents.